Pinecone

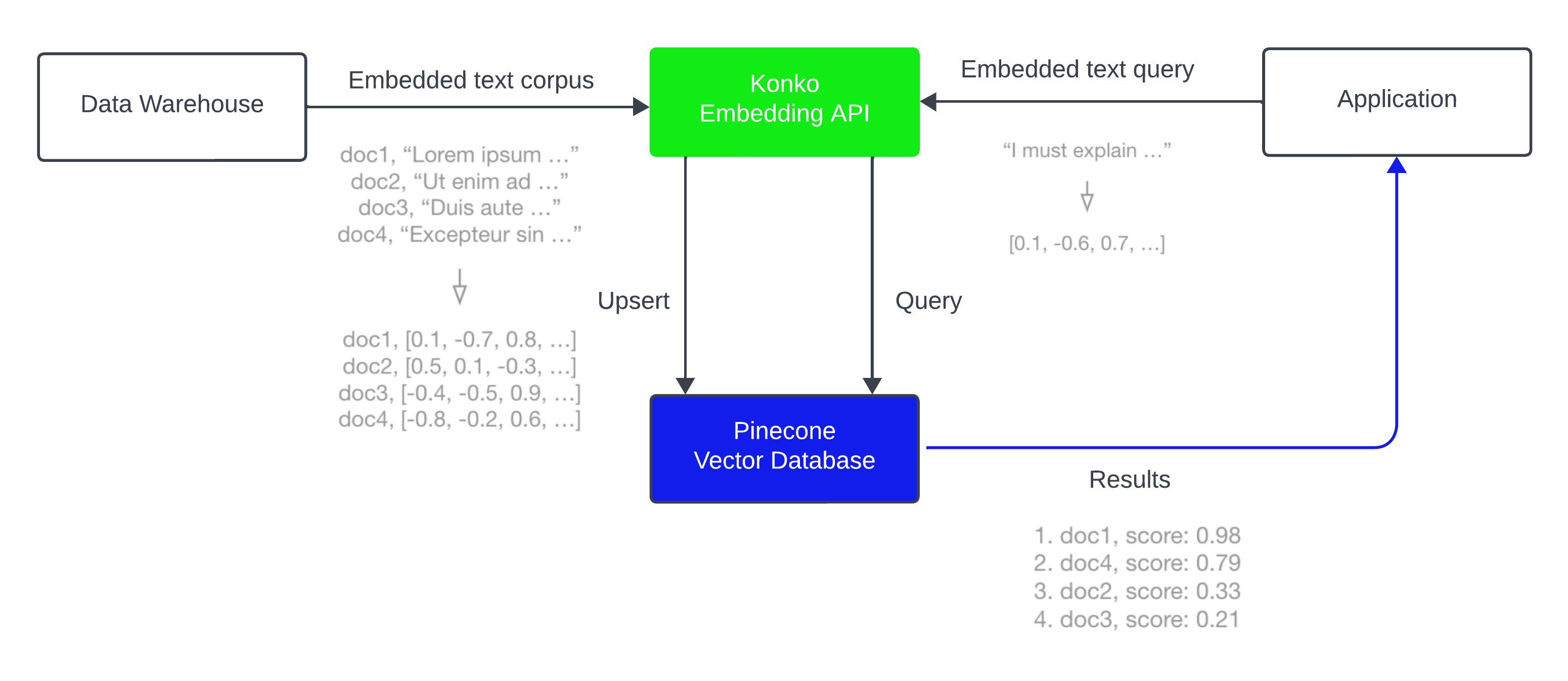

In this guide you will learn how to use the Konko Embeddings API endpoint to generate language embeddings, and then index those embeddings in the Pinecone vector database for fast and scalable vector search.

This is a powerful and common combination for building semantic search, question-answering, threat-detection, and other applications that rely on NLP and search over a large corpus of text data.

Here's a simple breakdown of the process:

- Embedding and Indexing
  - Utilize the Konko Embeddings API endpoint to generate vector embeddings for your textual content.
  - Upload those vector embeddings into Pinecone, which can store and index billions of these vector embeddings, and search through them at ultra-low latencies.
- Search
  - Pass your query text or document through the Konko Embeddings API endpoint again.
  - Convert the obtained vector embedding into a query and forward it to Pinecone.
  - Receive documents that relate semantically, regardless of direct keyword matches with the input query.
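The two phases above can be sketched end to end without any external service. This is only an illustration under loud assumptions: `toy_embed` (letter counts, not a real language embedding) and `ToyIndex` (a brute-force in-memory store) are hypothetical stand-ins for the Konko Embeddings endpoint and a Pinecone index, and they mimic only the general shape of the calls used later in this guide.

```python
import math


def toy_embed(text):
    """Hypothetical stand-in for an embeddings endpoint.

    Returns a 26-dimensional, L2-normalized vector of letter counts.
    A real embedding model captures meaning; this one does not.
    """
    vec = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            vec[ord(ch) - ord("a")] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


class ToyIndex:
    """Hypothetical in-memory stand-in for a vector index."""

    def __init__(self):
        self._items = {}

    def upsert(self, vectors):
        # Each item is an (id, vector, metadata) tuple.
        for id_, vec, meta in vectors:
            self._items[id_] = (vec, meta)

    def query(self, vector, top_k=3):
        # Vectors are normalized, so the dot product equals cosine similarity.
        scored = [
            {"id": id_, "score": sum(a * b for a, b in zip(vector, vec)), "metadata": meta}
            for id_, (vec, meta) in self._items.items()
        ]
        scored.sort(key=lambda m: m["score"], reverse=True)
        return {"matches": scored[:top_k]}


# Phase 1: embed and index.
corpus = [
    "Why did the world enter a global depression in 1929 ?",
    "What crop failure caused the Irish Famine ?",
    "What is the capital of France ?",
]
toy_index = ToyIndex()
toy_index.upsert((str(i), toy_embed(t), {"text": t}) for i, t in enumerate(corpus))

# Phase 2: embed the query the same way, then search.
result = toy_index.query(toy_embed(corpus[0]), top_k=2)
for match in result["matches"]:
    print(f"{match['score']:.2f}: {match['metadata']['text']}")
```

The key design point carries over to the real services: the query must be embedded with the same model as the indexed documents, otherwise the vectors are not comparable.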

Environment Setup

We start by installing the Konko and Pinecone clients. We will also need Hugging Face Datasets to download the TREC dataset used in this guide.

pip install -U konko pinecone-client datasets

Creating Embeddings

Initializing Konko

To create embeddings, we must first initialize our connection to Konko. Follow the Setup Access & SDK guide to install and set up the Konko Python SDK.

import konko

# set the Konko API key and the OpenAI API key used for OpenAI-hosted models
konko.set_api_key('YOUR_API_KEY')
konko.set_openai_api_key('YOUR_OPENAI_API_KEY')

Initializing a Pinecone Index

Next, we initialize an index to store the vector embeddings. For this we need a Pinecone API key, which you can get by signing up at app.pinecone.io.

We first initialize our connection to Pinecone, and then create a new index for storing the embeddings (we will call it "konko-pinecone-trec").

The konko.Model.list() function should return a list of models that we can use. We will use OpenAI's Ada 002 model (text-embedding-ada-002), which produces 1536-dimensional embeddings, so the index is created with dimension=1536.

import pinecone

# initialize connection to pinecone (get API key at app.pinecone.io)
pinecone.init(
    api_key="YOUR_API_KEY",
    environment="YOUR_ENV"  # find next to API key in console
)

# check if the 'konko-pinecone-trec' index already exists (only create it if not)
if 'konko-pinecone-trec' not in pinecone.list_indexes():
    pinecone.create_index('konko-pinecone-trec', dimension=1536)

# connect to index
index = pinecone.Index('konko-pinecone-trec')
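Because the index is created with dimension=1536, every vector sent to it needs exactly that length. A minimal sketch of a pre-upsert check follows; `find_dimension_mismatches` is a hypothetical helper written for this guide, not part of the Konko or Pinecone clients, and the vectors in the demo are dummy data.

```python
EXPECTED_DIM = 1536  # the dimension passed to create_index in this guide


def find_dimension_mismatches(vectors, expected_dim=EXPECTED_DIM):
    """Return (position, actual_length) for every vector of the wrong length."""
    return [
        (pos, len(vec))
        for pos, vec in enumerate(vectors)
        if len(vec) != expected_dim
    ]


# Dummy vectors: two with the expected length and one that is too short.
dummy_vectors = [[0.0] * 1536, [0.0] * 768, [0.0] * 1536]
mismatches = find_dimension_mismatches(dummy_vectors)
print(mismatches)
```

Running a check like this before upserting can surface a model and index mismatch early, for example after switching to an embedding model with a different output size.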

Populating the Index

We will load the Text REtrieval Conference (TREC) question classification dataset which contains 5.5K labeled questions. We will take the first 1K samples for this walkthrough, but this can be efficiently scaled to billions of samples.

from datasets import load_dataset

# load the first 1K rows of the TREC dataset
trec = load_dataset('trec', split='train[:1000]')

Then we create a vector embedding for each question using Konko, and upsert the ID, vector embedding, and original text for each phrase to Pinecone.

High-cardinality metadata values (like the unique text values we use here) can reduce the number of vectors that fit on a single pod. See Limits for more.

from tqdm.auto import tqdm  # this is our progress bar

MODEL = "text-embedding-ada-002"
batch_size = 32  # process everything in batches of 32

for i in tqdm(range(0, len(trec['text']), batch_size)):
    # set end position of batch
    i_end = min(i + batch_size, len(trec['text']))
    # get batch of lines and IDs
    lines_batch = trec['text'][i:i_end]
    ids_batch = [str(n) for n in range(i, i_end)]
    # create embeddings
    res = konko.Embedding.create(input=lines_batch, model=MODEL)
    embeds = [record['embedding'] for record in res['data']]
    # prep metadata and upsert batch
    meta = [{'text': line} for line in lines_batch]
    to_upsert = zip(ids_batch, embeds, meta)
    # upsert to Pinecone
    index.upsert(vectors=list(to_upsert))
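The batching logic in the loop above can be isolated and tried without calling either service. The sketch below uses hypothetical helpers (`iter_batches`, `build_upsert_payload`) and placeholder data, with 3-dimensional dummy vectors standing in for real embeddings. It also adds a length check, since `zip` silently drops items when the number of embeddings differs from the number of input lines.

```python
def iter_batches(items, batch_size):
    """Yield (start, end, batch) slices of items, each at most batch_size long."""
    for start in range(0, len(items), batch_size):
        end = min(start + batch_size, len(items))
        yield start, end, items[start:end]


def build_upsert_payload(start, end, lines, embeds):
    """Pair string IDs, vectors, and text metadata into (id, vector, metadata) tuples."""
    if len(lines) != len(embeds):
        raise ValueError("got a different number of embeddings than input lines")
    ids = [str(n) for n in range(start, end)]
    return [(id_, vec, {"text": line}) for id_, vec, line in zip(ids, embeds, lines)]


# Demo with placeholder data: 1,000 dummy lines, batches of 32.
lines = [f"question {n}" for n in range(1000)]
batches = list(iter_batches(lines, 32))

# Inspect the final, shorter batch.
start, end, last_batch = batches[-1]
dummy_embeds = [[0.0, 0.0, 0.0] for _ in last_batch]  # stand-in for real embeddings
payload = build_upsert_payload(start, end, last_batch, dummy_embeds)
print(len(batches), len(last_batch), payload[0][0], payload[-1][0])
```

With 1,000 items and a batch size of 32 there are 31 full batches and one final batch of 8, which is why the end position is clamped with `min`.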

Querying

Now that we have our indexed vectors we can perform a few search queries. When searching we will first embed our query using Konko, and then search using the returned vector in Pinecone.

query = "What caused the 1929 Great Depression?"

# embed the query with the same model used for the indexed questions
xq = konko.Embedding.create(input=query, model=MODEL)['data'][0]['embedding']

Now we query.

res = index.query([xq], top_k=5, include_metadata=True)

The response from Pinecone includes our original text in the metadata field. Let's print the top_k most similar questions and their similarity scores.

for match in res['matches']:
    print(f"{match['score']:.2f}: {match['metadata']['text']}")

0.92: Why did the world enter a global depression in 1929 ?

0.87: When was `` the Great Depression '' ?

0.81: What crop failure caused the Irish Famine ?

0.80: What historical event happened in Dogtown in 1899 ?

0.79: What caused the Lynmouth floods ?
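The lower-ranked matches above are only loosely related to the query, so an application may want to keep only results above a similarity cutoff. A minimal sketch follows, assuming a response shaped like the one iterated above. The `sample_res` dictionary is hand-built from the rounded scores printed above, and both `filter_matches` and the 0.85 cutoff are hypothetical choices for illustration, not recommended values.

```python
# Hand-built sample shaped like the query response; scores and text are
# copied from the rounded output printed above.
sample_res = {
    "matches": [
        {"score": 0.92, "metadata": {"text": "Why did the world enter a global depression in 1929 ?"}},
        {"score": 0.87, "metadata": {"text": "When was `` the Great Depression '' ?"}},
        {"score": 0.81, "metadata": {"text": "What crop failure caused the Irish Famine ?"}},
        {"score": 0.80, "metadata": {"text": "What historical event happened in Dogtown in 1899 ?"}},
        {"score": 0.79, "metadata": {"text": "What caused the Lynmouth floods ?"}},
    ]
}


def filter_matches(res, min_score):
    """Keep only matches whose similarity score is at least min_score."""
    return [m for m in res["matches"] if m["score"] >= min_score]


# 0.85 is an arbitrary illustrative cutoff, not a tuned threshold.
strong = filter_matches(sample_res, min_score=0.85)
for m in strong:
    print(f"{m['score']:.2f}: {m['metadata']['text']}")
```

A suitable cutoff depends on the embedding model and the data, so it is worth checking against a handful of known queries before relying on it.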

This example shows that the semantic search pipeline matches on meaning rather than exact wording: the top result has the same meaning as our query even though it is phrased differently. Using these embeddings with Pinecone allows us to return the most semantically similar questions from the already indexed TREC dataset.
